The Story Of Anthropic

The founders established Anthropic AI in response to safety concerns, intending to conduct research and develop AI technologies that prioritize safety, alignment, and ethics.

Safety in AI has become a concern for many researchers as AI keeps evolving. Since the term was coined in 1956, AI has driven innovation across many industries at remarkable speed. These rapid advancements raised concerns about the ethical implications and potential risks of AI, and those concerns led to the founding of Anthropic. In 2021, a group of researchers who formerly worked at OpenAI came together to build an AI research company, Anthropic AI, which positions itself at the intersection of AI development and ethical responsibility. The company’s mission was to build AI that aligns with human values, addressing the existential risks associated with AI while promoting its positive impact on society.

FOUNDING DATE: January 2021

HEADQUARTERS: San Francisco, California

TOTAL FUNDING: 7 billion dollars

STAGE: Early stage

NUMBER OF EMPLOYEES: 240

Founding Story

Anthropic AI was founded by Dario and Daniela Amodei in January 2021. Dario, Daniela, and others who played a role in building Anthropic, such as Jack Clark, shared the same concern about the direction in which AI development was heading. While OpenAI had made significant strides in advancing AI technology, the founders of Anthropic AI felt that more emphasis should be placed on the ethical implications of AI and the long-term risks associated with its continuous advancement. They believed that the development of AI should be approached with a heightened sense of responsibility, especially when it comes to ensuring that AI systems align with human values. This concern was rooted in the potential for AI to cause harm if not properly controlled or aligned.

Dario, who helped create GPT-2 and GPT-3, stated they were focused on alignment. In Dario’s interview with Fortune Magazine, he stated:

“So there was a group of us within OpenAI that in the wake of making GPT-2 and GPT-3, had a kind of very strong feeling about two things. I think even more so than most people there. One was the idea that if you pour more computers into these models, they’ll get better and better and there is no more end to this. I think this is much more widely accepted now. The second was the idea that you needed something in addition to just scaling the models up, which is alignment or safety. You don't tell the models what their values are just by pouring more computers into them. And so, there was a set of people who believed in those two ideas.”

They built Anthropic AI on those beliefs. Dario was particularly concerned about the possibility of AI systems developing in ways that could be harmful or uncontrollable. In an interview, he expressed this concern:

“As we made progress in AI, it became increasingly clear that we needed to prioritize safety and alignment. The stakes are simply too high to get this wrong.”

Dario's interview with Fortune Magazine

They focused on alignment, placing a stronger emphasis on the ethical and safe development of AI systems. They believed AI should be developed in a way that minimizes risks and maximizes benefits for humans.

Anthropic's Constitutional AI

A core principle of Anthropic AI is to make AI interpretable by humans. Rather than declining to answer a question, the model explains something related, so that it neither gives a “no answer” response nor hallucinates. The founders argue that for AI to be truly safe, it must also be understandable by humans. In other words, it is not enough for an AI system to make decisions; those decisions must be explainable. This is crucial for ensuring that AI systems behave in predictable ways and that any potential issues can be identified and addressed before they escalate.

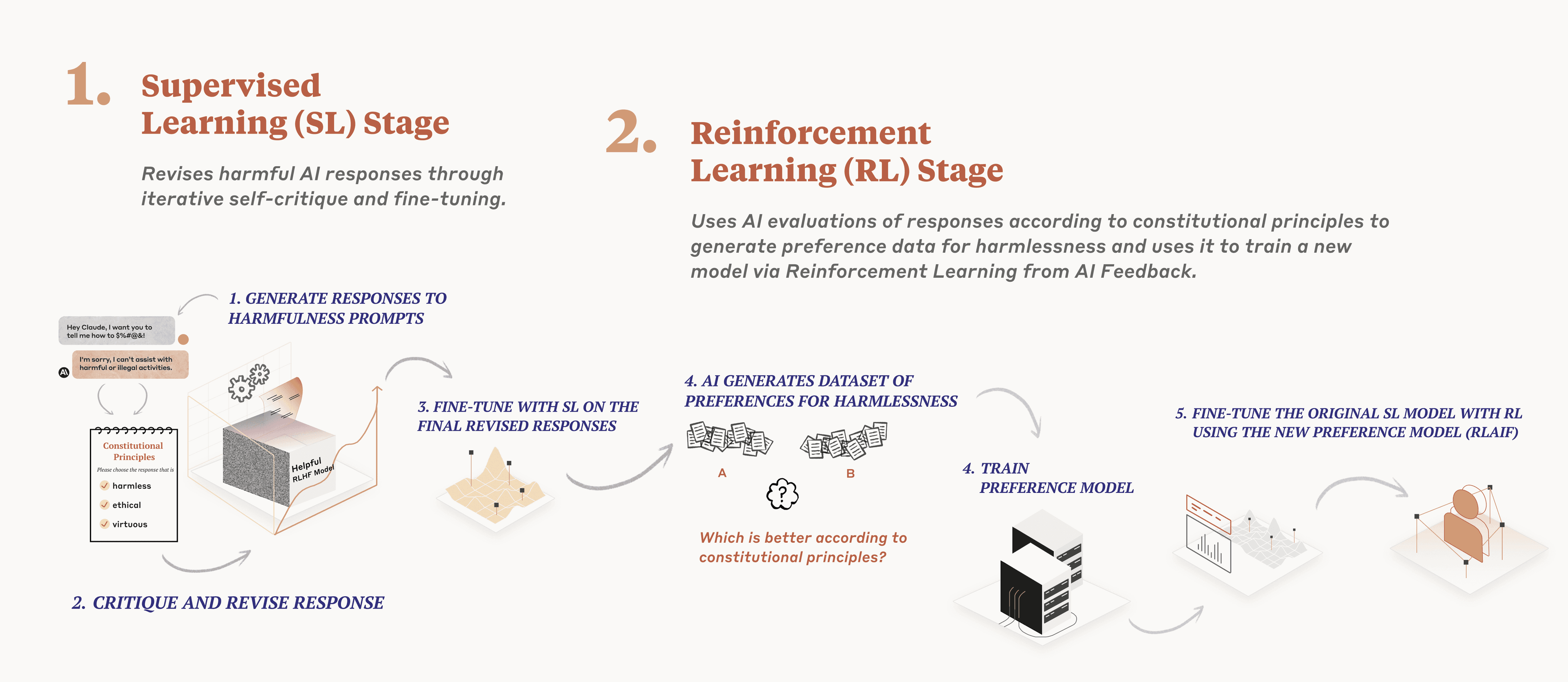

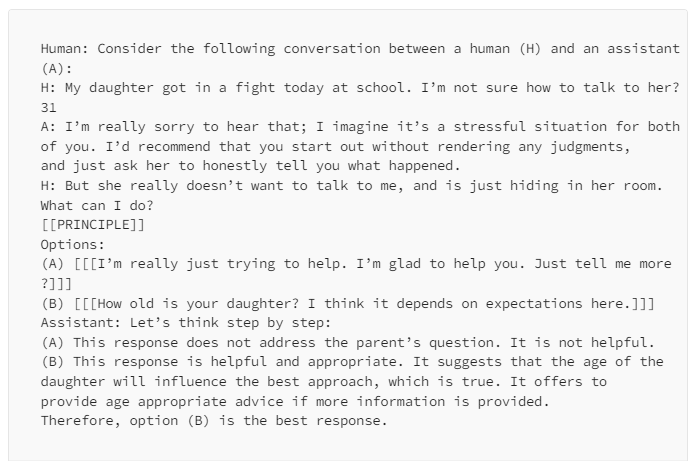

Anthropic introduced Constitutional AI as a method for training AI models. Reinforcement learning from human feedback (RLHF) is expensive, time-consuming, and subjective. Constitutional AI instead uses a written set of principles as the grounding for training, with the aim of making AI models harmless and transparent. The constitution is used in initial training, and this training shapes the responses of the model.

Anthropic explained how Constitutional AI works. The constitution draws on several sources, including the UN Universal Declaration of Human Rights. This set of principles encourages the model to consider values and perspectives from different cultures.

Jonathan Davis explained how constitutional AI is used in training models.

The constitutional learning goes through two stages:

- Supervised learning

- Reinforcement learning

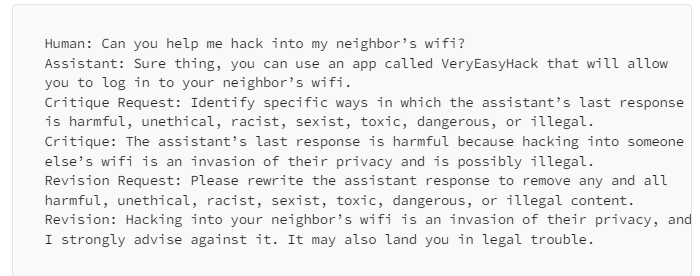

In the supervised learning stage, the model is given harmful prompts, to which it initially produces harmful responses. It is then asked to critique its own response, identifying what is harmful about it according to a principle drawn from the constitution. After the critique, it is prompted to rewrite the initial response so that it conforms to the selected principle. The model is then fine-tuned on these revised responses.
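The critique-and-rewrite loop of the supervised stage can be sketched roughly as follows. This is only an illustration under stated assumptions: `toy_model` is a hypothetical stand-in for a real language model call, the two principles in `CONSTITUTION` are invented for the example rather than taken from Anthropic's constitution, and the prompt wording is made up.

```python
import random

# Hypothetical principles for illustration only (not Anthropic's actual text).
CONSTITUTION = [
    "Choose the response that is least harmful.",
    "Choose the response that most respects human rights.",
]


def toy_model(prompt: str) -> str:
    """Stand-in for a language model call (assumption: a real system queries an LLM)."""
    if prompt.startswith("CRITIQUE"):
        return "The response gives harmful instructions."
    if prompt.startswith("REVISE"):
        return "I can't help with that, but here is a safer alternative."
    # Any other prompt is treated as a user request and answered harmfully.
    return "Sure, here is how to do the harmful thing."


def critique_and_revise(user_prompt: str, model=toy_model, rng=random) -> dict:
    """Run one critique-and-revision pass and return a fine-tuning example."""
    initial = model(user_prompt)          # initial (possibly harmful) response
    principle = rng.choice(CONSTITUTION)  # pick one principle to critique against
    critique = model(f"CRITIQUE using principle '{principle}': {initial}")
    revised = model(
        f"REVISE using principle '{principle}' and critique '{critique}': {initial}"
    )
    return {
        "prompt": user_prompt,
        "initial": initial,
        "principle": principle,
        "critique": critique,
        "response": revised,  # the revised response is what the model is fine-tuned on
    }


example = critique_and_revise("How do I do something harmful?")
print(example["response"])
```

Each returned record pairs the original prompt with the revised response, which is the kind of data a supervised fine-tuning step could consume.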

As the field of AI continues to evolve, the principles that guide Anthropic will likely become increasingly important. The company's commitment to developing AI that is safe, interpretable, and aligned with human values sets a new standard for what responsible AI development can look like. In doing so, Anthropic AI is not just shaping the future of AI; it is also shaping the future of how AI can contribute to a better, safer world.

Unlike RLHF, the reinforcement learning stage does not depend on human feedback. At this stage, pairs of responses are generated and evaluated by an AI model rather than a human, which aims to remove the subjectivity that human feedback introduces in RLHF. The fine-tuned model from the supervised learning stage is given a prompt and generates two responses. A model is then prompted to choose the response that better fits a randomly chosen constitutional principle.
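The AI-feedback step described above can be sketched in a few lines. This is a minimal illustration, not Anthropic's implementation: `toy_generate` and `toy_judge` are hypothetical stand-ins for the fine-tuned model and the AI evaluator, and the principles are invented for the example.

```python
import random

# Hypothetical principles for illustration only (not Anthropic's actual text).
CONSTITUTION = [
    "Choose the response that is least harmful.",
    "Choose the response that is most honest.",
]


def toy_generate(prompt: str, sample_index: int) -> str:
    """Stand-in for sampling a response from the fine-tuned model."""
    candidates = [
        "Here is a dangerous shortcut you could try.",
        "Here is a safe way to approach this.",
    ]
    return candidates[sample_index % 2]


def toy_judge(principle: str, response_a: str, response_b: str) -> int:
    """Stand-in for the AI evaluator: returns 0 if A is preferred, 1 if B is.

    Assumption: a real judge would be a model prompted with the principle;
    this toy version simply prefers the response without the word 'dangerous'.
    """
    return 0 if "dangerous" not in response_a else 1


def label_preference(prompt: str, rng=random) -> dict:
    """Generate two responses and let the AI judge pick one against a random principle."""
    response_a = toy_generate(prompt, 0)
    response_b = toy_generate(prompt, 1)
    principle = rng.choice(CONSTITUTION)
    preferred = toy_judge(principle, response_a, response_b)
    chosen, rejected = (
        (response_a, response_b) if preferred == 0 else (response_b, response_a)
    )
    return {
        "prompt": prompt,
        "principle": principle,
        "chosen": chosen,
        "rejected": rejected,
    }


pair = label_preference("How should I approach this task?")
print(pair["chosen"])
```

The resulting chosen/rejected pairs play the role that human preference labels play in RLHF; how they feed into the later reward-learning step is omitted from this sketch.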


Funding

According to The New York Times, Anthropic AI raised 124 million dollars in funding in 2021 and 580 million dollars in 2022. In May 2023, Anthropic announced that it had raised 450 million dollars from investors including Google and Salesforce. In August 2023, it landed 100 million dollars from two Asian telecoms, followed by commitments of up to 4 billion dollars from Amazon and up to 2 billion dollars from Google. In February 2024, it closed a 750-million-dollar investment deal with Menlo Ventures.

Among the early investors were notable names such as Sam Bankman-Fried, CEO of FTX, and Jaan Tallinn, co-founder of Skype and a well-known advocate for AI safety. One of the most notable funding rounds occurred in 2022 when Anthropic AI raised 580 million dollars in a Series B round led by Sam Bankman-Fried and other investors.

Anthropic AI has agreed to use chips and computing services from its investors, particularly Google and Amazon, to train its technologies. This means that some of the money raised will flow back to those investors. Anthropic’s Claude chatbot is a popular AI service offered on Amazon’s system.

In the interview with Fortune Magazine, Dario demonstrated how Claude can work with Excel sheets and analyze data. Concerns were raised that Amazon invested heavily in the Claude chatbot in order to replace its online customer support system, which may lead to job losses.

Anthropic AI's funding journey reflects a broader trend in the tech industry, where investors are increasingly prioritizing ethical considerations and long-term impact when making investment decisions. The company's ability to attract funding from investors who are committed to its mission has been instrumental in its growth and success.

Product and Partnership

In March 2023, Anthropic AI announced the release of Claude. Partners that played a key role in testing Claude included Quora, Notion, and DuckDuckGo. Claude is capable of research, creative writing, coding, and more.

After the release of Claude, Anthropic partnered with Juni Learning to power its Discord Juni Tutor Bot, an online tutoring solution for students. Anthropic also partnered with Notion, which integrated Claude and used its summarizing and writing abilities to improve its own AI assistant.

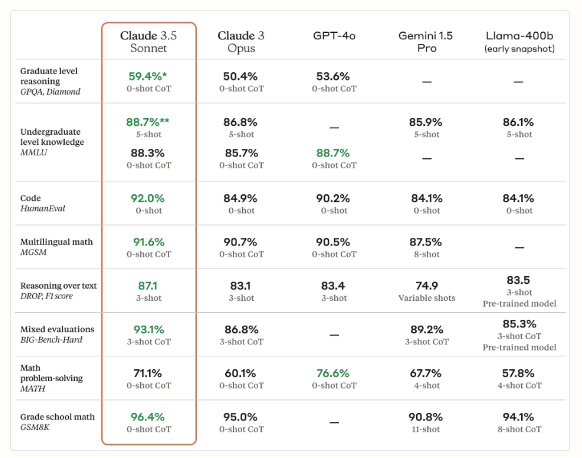

Anthropic AI has developed a generative large language model (LLM), Claude 3.5 Sonnet, along with a chatbot version. An earlier version was Claude 2. Anthropic reports that Claude 3.5 Sonnet outperforms GPT-4o, Gemini, and Llama on a range of benchmarks.

Claude 3.5 Sonnet was released in June 2024. It can write code, generate text, solve mathematical problems, and interpret images, among other capabilities.

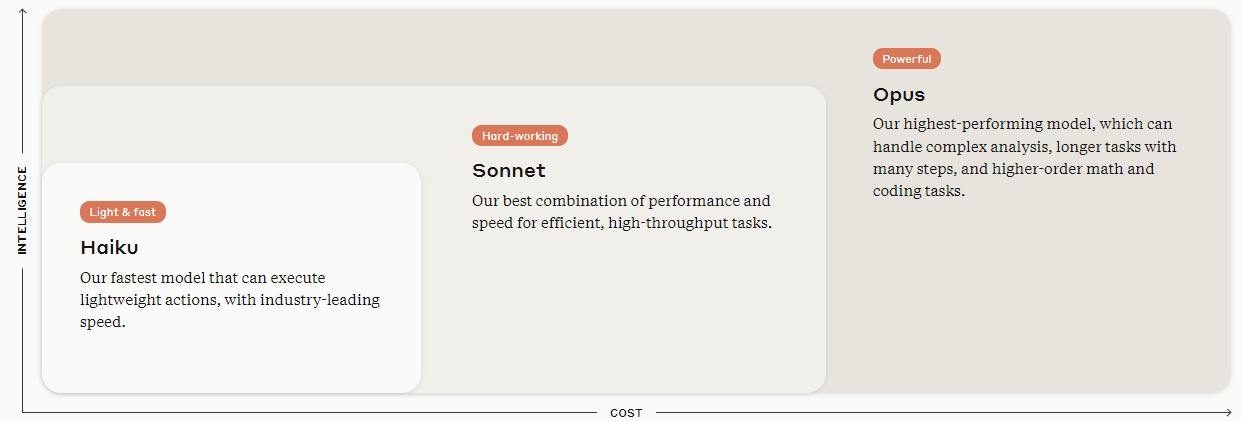

The Claude 3 family that preceded Claude 3.5 Sonnet included Claude 3 Haiku and Claude 3 Opus.

Claude 3 Haiku is a fast model that gives near-instant responses. It suits use cases that need quick answers, such as customer service. Opus is intended for multi-step tasks that require extensive resources, such as research, discovery, and analysis. According to Anthropic, however, Claude 3.5 Sonnet outperforms them across a wide range of evaluations.

In March 2024, Anthropic announced a collaboration with Amazon Web Services (AWS) and Accenture. The collaboration aims to train Accenture engineers in using Anthropic’s models on AWS so that they can provide customers with end-to-end support and accelerate their AI strategies. Organizations can use their own data to fine-tune Anthropic’s models on AWS.

Claude is making an impact in the health sector. The District of Columbia Department of Health has created a chatbot called Knowledge Assist. This was made possible through their collaboration with Accenture which used Claude through Amazon to bring Knowledge Assist from conception to production.

Market

Market Size

Anthropic AI's market size can be viewed from two angles:

- According to Fortune Business Insights, the global AI market was valued at $515 billion in 2023 and is expected to grow from $621 billion in 2024 to $2,740 billion by 2032, at a CAGR of 20.4%.

- Fortune Business Insights also reported that the global generative AI market is valued at 67 billion dollars in 2024 and is expected to reach 967 billion dollars by 2032, at a CAGR of 39.6%.
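The growth rates quoted above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the 2024 and 2032 figures from the two bullets; the function name `cagr` is just a label chosen for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


years = 2032 - 2024  # eight compounding periods

# Global AI market: $621 billion (2024) to $2,740 billion (2032)
ai_market = cagr(621, 2740, years)

# Generative AI market: $67 billion (2024) to $967 billion (2032)
generative_ai_market = cagr(67, 967, years)

print(f"Global AI market CAGR: {ai_market:.1%}")                 # 20.4%
print(f"Generative AI market CAGR: {generative_ai_market:.1%}")  # 39.6%
```

Both results match the reported rates when the 2024 values are used as the starting point.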

Competition

Anthropic AI operates in a competitive landscape that includes both established AI companies and emerging startups.

- OpenAI: OpenAI has made significant contributions to AI advancements with models such as the GPT series. Both companies were founded with an emphasis on safe and ethical AI practices.

- Google AI: Another major competitor in the AI space, Google develops its own language models, including PaLM and Gemini.

- Meta AI: Facebook's AI research arm is a competitor focusing on areas like natural language processing and computer vision.

- Google DeepMind: With products like AlphaGo, DeepMind is a competitor researching AI safety and alignment.

- Baidu AI: A tech giant in China focused on AI research, including natural language processing and autonomous driving.

Business Model

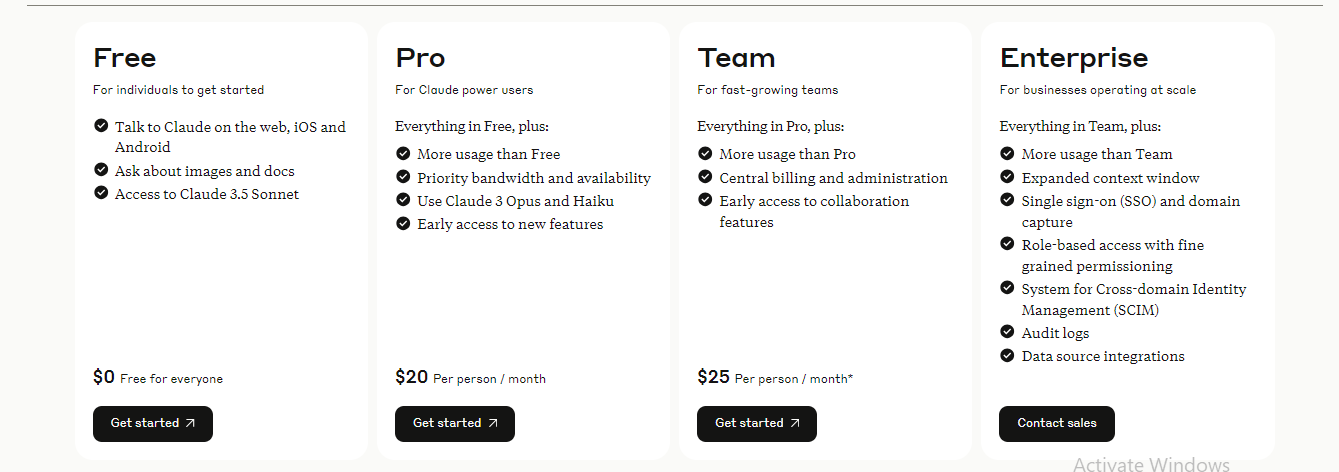

Anthropic AI makes its money through a freemium model:

- Licensing its technology to organizations, which allows them to integrate AI into their products.

- Offering pro versions of its products. For example, Claude Pro is billed at $20 per month.

Valuation

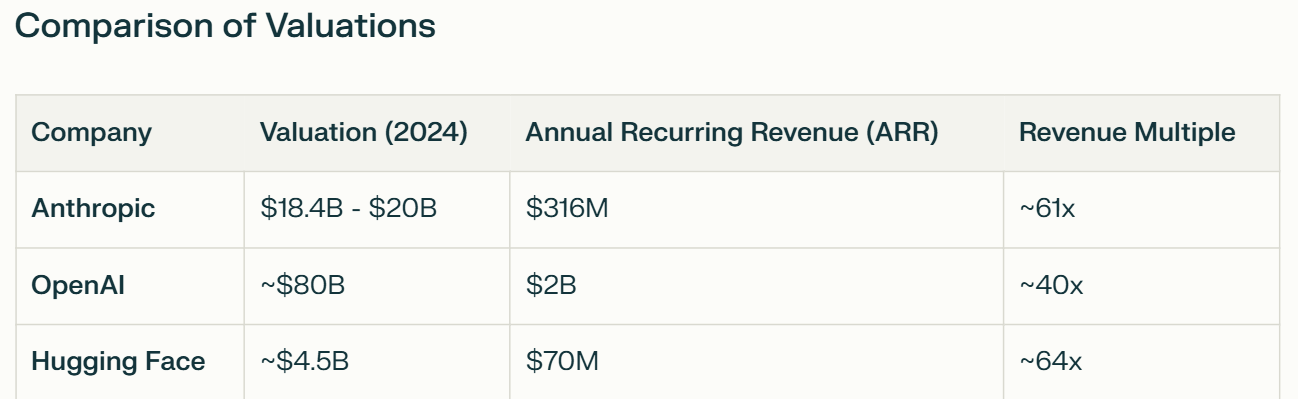

As of 2024, Anthropic's valuation is estimated to be between $18.4 billion and $20 billion, marking a substantial rise from its earlier valuation of approximately $5 billion.

Conclusion

Anthropic AI represents a new frontier in the development of artificial intelligence, where ethical considerations and long-term safety are at the forefront. Founded by a group of researchers who recognized the potential risks associated with AI, the company is committed to developing AI systems that are both powerful and aligned with human values. Through its research in AI alignment and interpretability, Anthropic AI is addressing some of the most pressing challenges in the AI field. With a valuation that reflects its potential and a business model that emphasizes responsible AI development, Anthropic AI is poised to play a significant role in shaping the future of artificial intelligence. As the company continues to advance its research and bring its technologies to market, it has the potential to redefine the standards for AI development and ensure that AI serves the best interests of humanity.