Alibaba's EMO AI System Sets New Standard in Video Generation

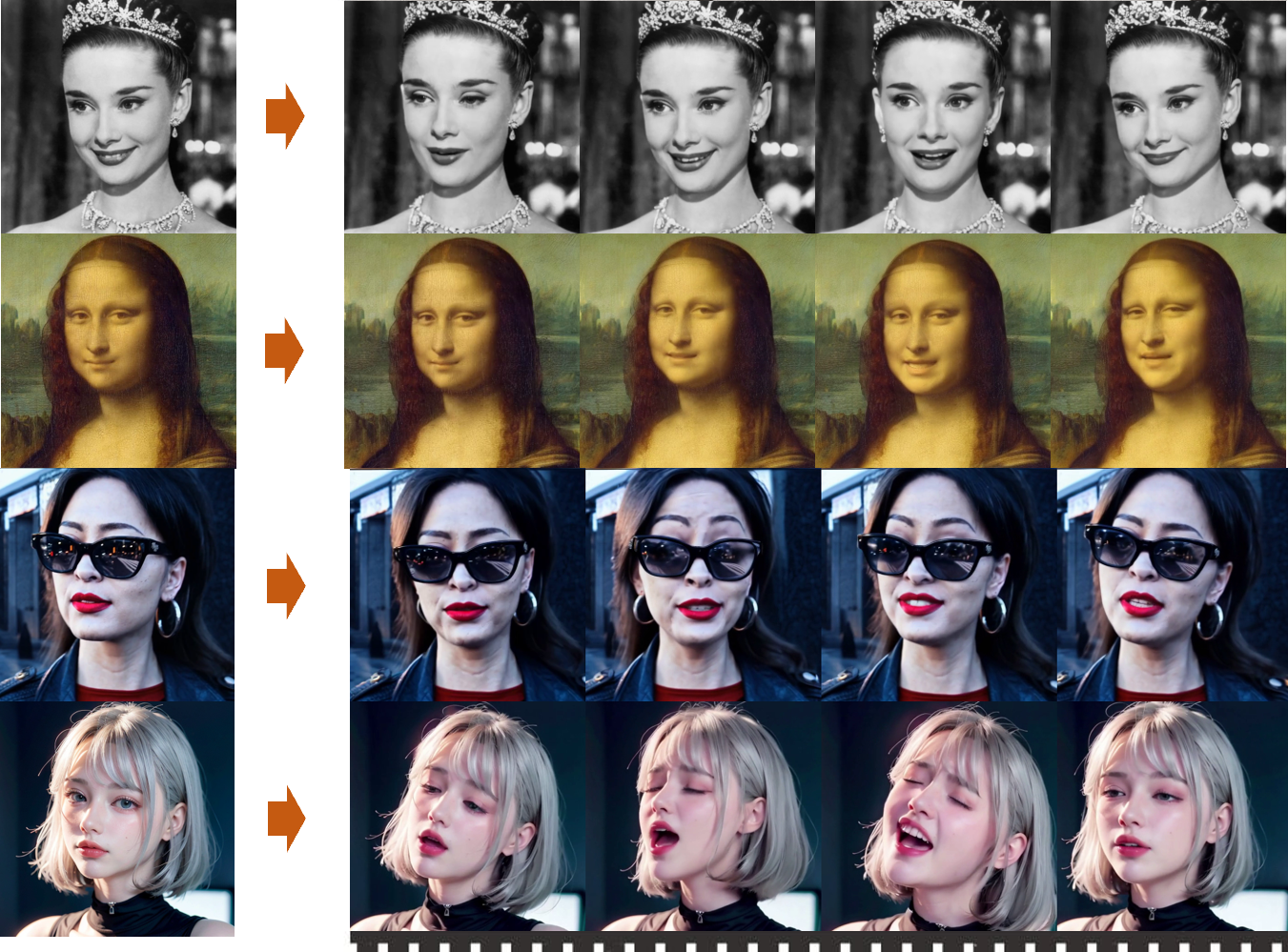

The system's versatility extends beyond conversational videos, with EMO capable of animating singing portraits with synchronized mouth shapes and evocative facial expressions.

Alibaba's latest innovation, EMO, generates highly expressive and realistic "talking head" videos from a single reference image and an audio file. Developed by Alibaba's Institute for Intelligent Computing, the artificial intelligence system, whose name is short for Emote Portrait Alive, can animate a single portrait photo into a video of the person talking or singing with striking realism.

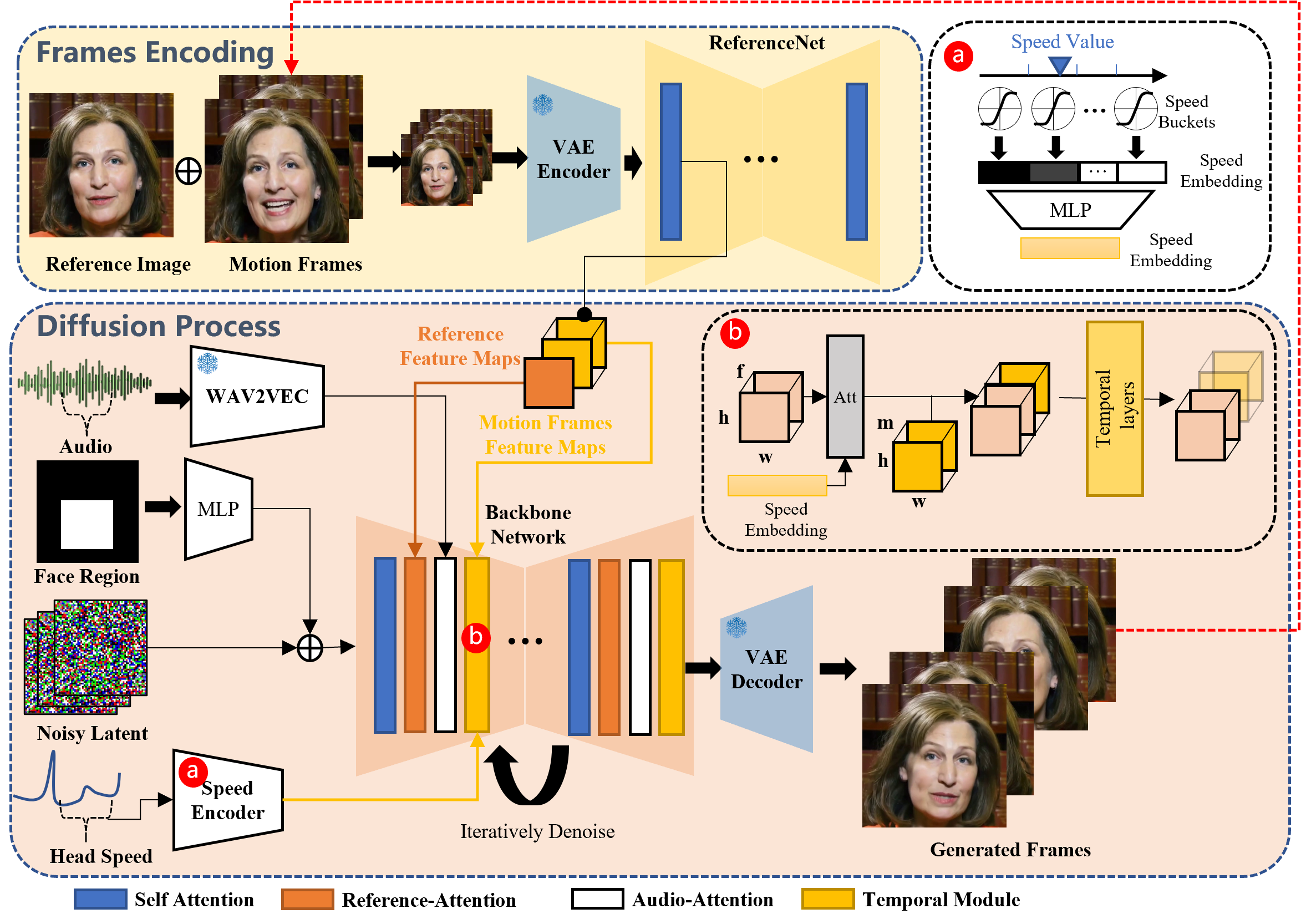

Published in a research paper on arXiv, the EMO system represents a significant breakthrough in audio-driven talking head video generation. Lead author Linrui Tian explains that traditional techniques often struggle to capture the full range of human expressions and individual facial styles. To address these limitations, EMO utilizes a novel framework that directly synthesizes video from audio input, bypassing the need for intermediate 3D models.

Built on a diffusion model and trained on a dataset of more than 250 hours of diverse talking head videos, EMO generates lifelike facial movements and head poses that stay closely synchronized with the provided audio track. Unlike previous methods that rely on 3D face models or blend shapes, EMO converts audio waveforms directly into video frames, which lets it capture the subtle motions and identity-specific nuances of natural speech.
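To give a rough sense of what "audio in, frames out" means for a diffusion model, here is a minimal toy sketch, not EMO's actual implementation. It runs a standard DDPM-style reverse (denoising) loop in which each frame starts as random noise and is refined step by step, with the audio features passed to the noise predictor at every step. The function names, the tiny 16-value "frame," and especially `toy_noise_predictor` (a hypothetical stand-in for a trained neural network) are assumptions made purely for illustration.

```python
import math
import random


def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule, as commonly used in DDPM-style samplers."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    return betas, alphas, alpha_bars


def toy_noise_predictor(x_t, t, audio_feat):
    """Hypothetical stand-in for a trained network (assumption, not EMO's model).

    A real system would predict the noise in x_t from the timestep, the
    reference image, and the audio; this toy only mixes in the mean audio value.
    """
    bias = sum(audio_feat) / len(audio_feat)
    return [0.1 * v + 0.01 * bias for v in x_t]


def sample_frame(audio_feat, num_pixels=16, num_steps=50, seed=0):
    """Generate one toy 'frame' by reverse diffusion conditioned on audio."""
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in range(num_pixels)]  # start from pure noise
    for t in reversed(range(num_steps)):
        eps_hat = toy_noise_predictor(x, t, audio_feat)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0  # no noise on the last step
        x = [(v - coef * e) / math.sqrt(alphas[t]) + sigma * rng.gauss(0.0, 1.0)
             for v, e in zip(x, eps_hat)]
    return x


def generate_clip(audio_feats, num_pixels=16):
    """One frame per chunk of audio features; no 3D face model in between."""
    return [sample_frame(f, num_pixels=num_pixels, seed=i)
            for i, f in enumerate(audio_feats)]


if __name__ == "__main__":
    clip = generate_clip([[0.1, 0.2], [0.5, 0.5], [1.0, -1.0]])
    print(len(clip), "frames of", len(clip[0]), "values each")
```

The point of the sketch is the data flow: the audio conditions the denoising of every frame directly, rather than first driving an intermediate 3D face model whose output is then rendered.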

Experimental results detailed in the paper demonstrate EMO's superiority over existing methods in terms of video quality, identity preservation, and expressiveness. Moreover, user studies confirm the perceived naturalness and emotiveness of EMO-generated videos compared to alternatives.

While limitations remain, such as occasional visual artifacts, EMO represents a significant leap forward in mapping audio directly to facial motion. It illustrates AI's potential to bring greater expressiveness to synthesized human video, pushing the boundaries of what's possible in AI-driven content creation.