OpenAI Unveils o1, A New AI Model Focused On Self-Fact-Checking And Advanced Reasoning

The standout feature of o1 is its ability to "think" before answering, which improves its performance on complex, multi-step tasks like legal analysis and coding.

OpenAI has launched its latest AI model family, OpenAI o1, which brings new features aimed at improving factual accuracy and reasoning. The family, developed under the codename Strawberry, is available in two versions: o1-preview and o1-mini. Both are accessible through the ChatGPT Plus and Team subscriptions, and OpenAI plans to extend access to free users.

The defining feature of the o1 models is their ability to fact-check themselves, which sets them apart from OpenAI’s previous models like GPT-4o. The o1 models "think" before responding, spending more time considering every part of a query. This makes them particularly effective at complex, multi-step tasks such as analyzing legal briefs and generating code, and it strengthens their ability to synthesize information.

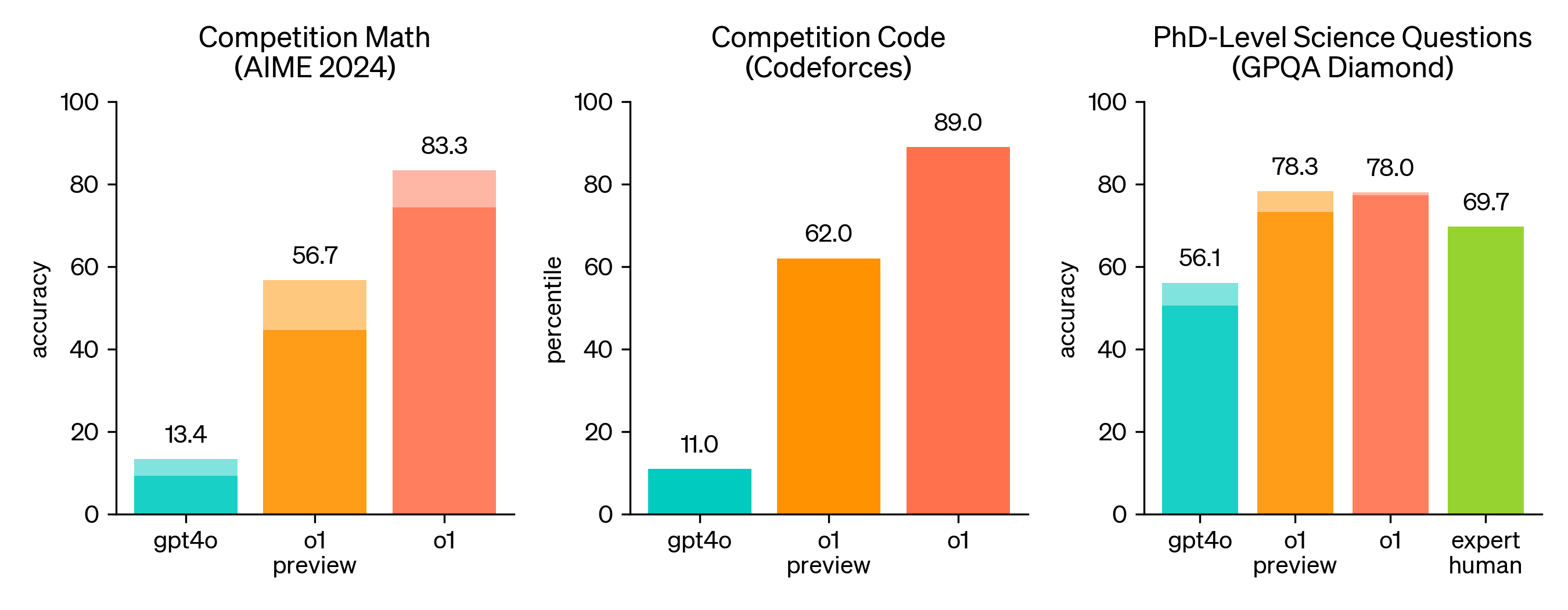

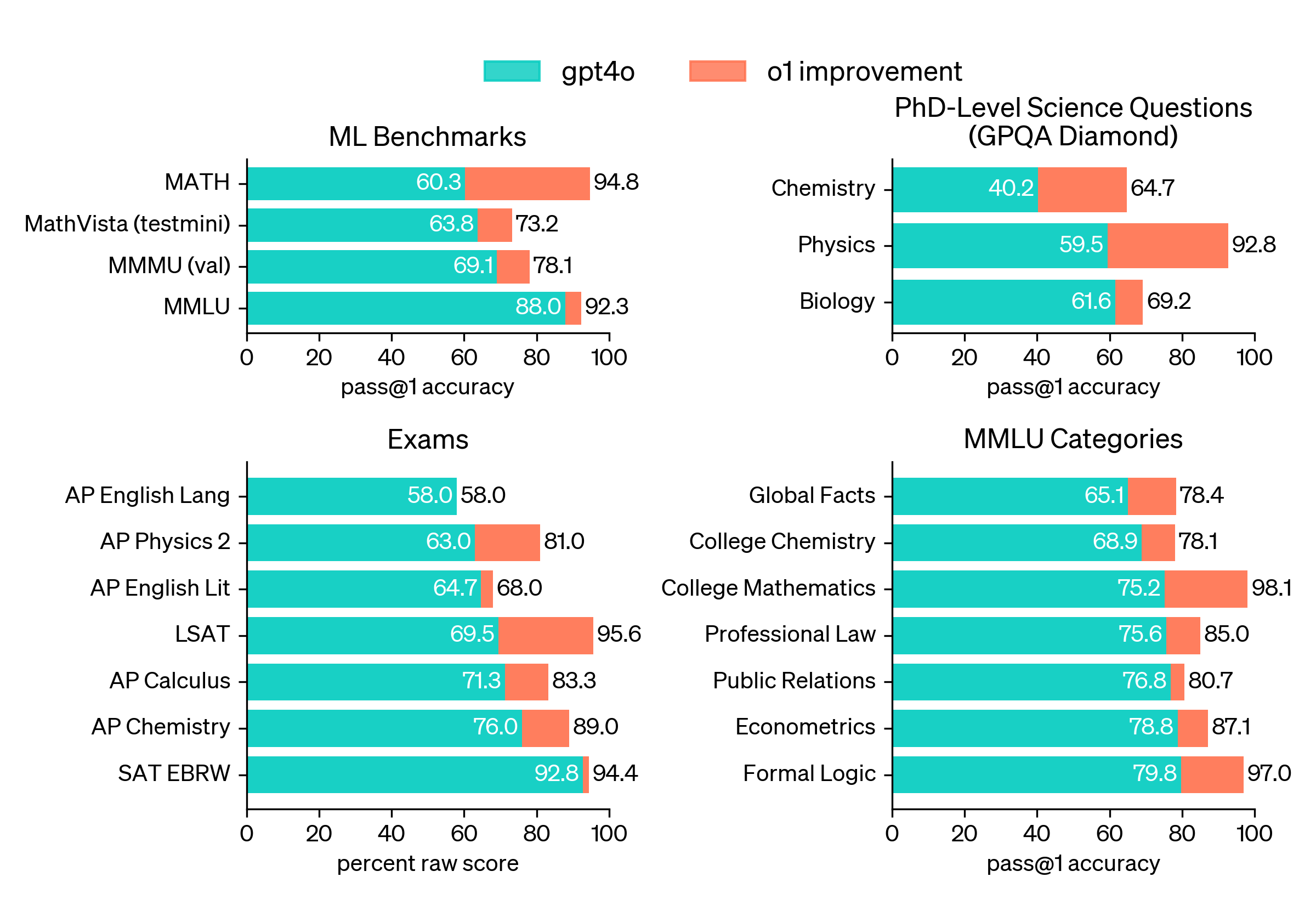

According to OpenAI, the new models avoid some of the reasoning pitfalls that commonly trip up generative AI systems. The company claims that o1 performs significantly better at data analysis, scientific reasoning, and programming. In OpenAI’s tests, the model did well on tasks like solving math problems and analyzing legal documents. For example, on a qualifying exam for the International Mathematical Olympiad, o1 solved 83% of the problems, compared with 13% for GPT-4o.

Despite its advanced capabilities, o1 has limitations. It is noticeably slower to respond, sometimes taking more than 10 seconds to answer certain questions. It is also expensive: API access costs $15 per 1 million input tokens and $60 per 1 million output tokens, much more than its predecessors. In addition, early testers have reported occasional hallucinations, in which the model confidently generates false information.
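To make the pricing concrete, here is a minimal sketch that estimates the cost of a single request at the per-token rates quoted above. The function name `estimate_cost` and the token counts in the example are hypothetical, chosen only for illustration; real token counts depend on the prompt and on how long the model's response runs.

```python
# Rates quoted in the article for o1, in USD per 1 million tokens.
INPUT_PRICE_PER_M = 15.00
OUTPUT_PRICE_PER_M = 60.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request at the quoted o1 rates.

    Hypothetical helper for illustration only; not part of any OpenAI SDK.
    """
    return (
        input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    ) / 1_000_000


# Hypothetical request: 2,000 input tokens and 5,000 output tokens.
cost = estimate_cost(2_000, 5_000)
print(f"${cost:.2f}")  # prints "$0.33"
```

Because output tokens cost four times as much as input tokens, long responses dominate the bill: in this example the 5,000 output tokens account for $0.30 of the $0.33 total.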