OpenAI's DevDay: Unveiling Powerful New Models and Developer Products


OpenAI's DevDay event has left tech enthusiasts worldwide buzzing with excitement as the company announced new models and developer products, along with more affordable pricing. These releases promise to reshape the AI landscape and open up a realm of possibilities for developers and businesses.

We're rolling out new features and improvements that developers have been asking for:

— OpenAI (@OpenAI) November 6, 2023

1. Our new model GPT-4 Turbo supports 128K context and has fresher knowledge than GPT-4. Its input and output tokens are respectively 3× and 2× less expensive than GPT-4. It’s available now to…

GPT-4 Turbo: AI on Steroids

OpenAI kicked things off by unveiling GPT-4 Turbo, the next generation of their popular GPT-4 model. This powerhouse AI model boasts a whopping 128K context window, enough to fit the equivalent of more than 300 pages of text in a single prompt. Not only is it more capable, but it's also more budget-friendly: input tokens are 3x cheaper and output tokens 2x cheaper than with GPT-4. Developers can try GPT-4 Turbo now in preview by passing gpt-4-1106-preview as the model name, with a stable, production-ready model on the horizon.
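The "more than 300 pages" figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes the common rule of thumb of roughly 0.75 English words per token and an assumed 300 words per printed page; both ratios vary with language and layout, so treat the result as an estimate rather than a specification.

```python
# Rough estimate of how many printed pages fit in a context window.
# Assumptions (approximate): ~0.75 English words per token, ~300 words per page.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 300

def pages_in_context(context_tokens: int) -> float:
    """Convert a token budget into an approximate page count."""
    words = context_tokens * WORDS_PER_TOKEN
    return words / WORDS_PER_PAGE

# GPT-4's base context is 8,192 tokens; GPT-4 Turbo's is 128K.
for name, tokens in [("GPT-4", 8_192), ("GPT-4 Turbo", 128_000)]:
    print(f"{name}: ~{pages_in_context(tokens):.0f} pages")
```

Under these assumptions, 128,000 tokens works out to about 96,000 words, or roughly 320 pages.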

Function Calling Evolves

Function calling has also improved significantly. You can now send a single message that requests multiple actions, and the model can call several functions in response. A request such as "open the car window and turn off the A/C" no longer needs a separate round trip with the model for each action. Moreover, GPT-4 Turbo is now more likely to return the right function parameters.
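With parallel function calling, one assistant message can carry several entries in its `tool_calls` array, each with a function name and a JSON-encoded `arguments` string. The sketch below dispatches such a message locally. The response dict is hand-written to mirror the Chat Completions response shape, and the car-control functions `open_window` and `set_ac` are hypothetical stand-ins for your own code.

```python
import json

# Hypothetical local implementations the model is allowed to call.
def open_window(position: str) -> dict:
    return {"window": position, "state": "open"}

def set_ac(on: bool) -> dict:
    return {"ac": "on" if on else "off"}

AVAILABLE_FUNCTIONS = {"open_window": open_window, "set_ac": set_ac}

def run_tool_calls(message: dict) -> list[dict]:
    """Execute every tool call in one assistant message and build the
    'tool' messages that would be sent back to the model."""
    results = []
    for call in message.get("tool_calls", []):
        fn = AVAILABLE_FUNCTIONS[call["function"]["name"]]
        # Arguments arrive as a JSON string, not as a parsed object.
        args = json.loads(call["function"]["arguments"])
        output = fn(**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results

# Hand-written example: one reply requesting two calls at once
# ("open the car window and turn off the A/C").
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "open_window", "arguments": '{"position": "driver"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "set_ac", "arguments": '{"on": false}'}},
    ],
}

for msg in run_tool_calls(assistant_message):
    print(msg)
```

Each result is keyed by `tool_call_id`, so the model can match every output to the call that produced it when the conversation continues.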

Sharper Instruction Following and JSON Mode

GPT-4 Turbo shines when it comes to following instructions carefully, such as generating content in a specific format like XML. The newly introduced JSON mode ensures that the model responds with syntactically valid JSON. This is especially valuable for developers generating JSON in the Chat Completions API outside of function calling.
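JSON mode is switched on by setting `response_format={"type": "json_object"}` on a Chat Completions request, and OpenAI's documentation requires the word "JSON" to appear somewhere in the messages. The sketch below builds such a request body and checks a reply without making any network call. The reply string is a made-up example, and the helper names are hypothetical. Because JSON mode guarantees valid JSON but not any particular schema, the sketch still checks for the keys the app expects.

```python
import json

def build_json_mode_request(question: str) -> dict:
    """Chat Completions request body with JSON mode switched on."""
    return {
        "model": "gpt-4-1106-preview",
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode requires the word "JSON" somewhere in the messages.
            {"role": "system", "content": "Answer in JSON with keys 'city' and 'country'."},
            {"role": "user", "content": question},
        ],
    }

def parse_reply(content: str, required_keys: set[str]) -> dict:
    """Parse a JSON-mode reply and check for the keys the app expects."""
    data = json.loads(content)  # valid JSON is guaranteed; a schema is not
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data

request = build_json_mode_request("Where is the Eiffel Tower?")
print(json.dumps(request, indent=2))

# Made-up reply standing in for the API's message content.
reply = '{"city": "Paris", "country": "France"}'
print(parse_reply(reply, {"city", "country"}))
```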

Reproducible Outputs and Log Probabilities

OpenAI has introduced a seed parameter for reproducible outputs, a feature that many developers will find invaluable. With a fixed seed, the model returns consistent completions most of the time, which helps with debugging, writing more comprehensive unit tests, and keeping better control over the model's behavior. Moreover, OpenAI plans to return log probabilities for the most likely output tokens generated by GPT-4 Turbo and GPT-3.5 Turbo, which will be useful for building features such as autocomplete in search experiences.
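A reproducibility check built on the `seed` parameter might look like the sketch below. It assumes you sent two requests with identical parameters and the same `seed`. It also compares the `system_fingerprint` field that responses carry: if the fingerprints differ, OpenAI's backend configuration changed between calls, and different outputs are expected. The response dicts and fingerprint values are hand-written examples, and the helper names `compare_runs` and `fake_response` are hypothetical.

```python
def compare_runs(first: dict, second: dict) -> str:
    """Classify two Chat Completions responses produced with the same seed."""
    if first["system_fingerprint"] != second["system_fingerprint"]:
        # Backend configuration changed; determinism is not expected.
        return "backend changed"
    text_a = first["choices"][0]["message"]["content"]
    text_b = second["choices"][0]["message"]["content"]
    # Even with matching fingerprints, determinism is best-effort.
    return "identical" if text_a == text_b else "diverged"

def fake_response(fingerprint: str, text: str) -> dict:
    # Minimal stand-in containing only the fields read above.
    return {"system_fingerprint": fingerprint,
            "choices": [{"message": {"role": "assistant", "content": text}}]}

# Request body you would send twice; `seed` is the parameter under test.
request = {
    "model": "gpt-4-1106-preview",
    "seed": 42,
    "temperature": 0,
    "messages": [{"role": "user", "content": "Name three primary colors."}],
}

a = fake_response("fp_abc123", "Red, blue, and yellow.")
b = fake_response("fp_abc123", "Red, blue, and yellow.")
c = fake_response("fp_def456", "Red, yellow, and blue.")
print(compare_runs(a, b))  # identical
print(compare_runs(a, c))  # backend changed
```

In a test suite, a "diverged" result with matching fingerprints is a signal to investigate, while "backend changed" suggests regenerating the stored reference output.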

Updates to GPT-3.5 Turbo

Alongside GPT-4 Turbo, OpenAI has also released a new version of GPT-3.5 Turbo that supports a 16K context window by default. The new model supports improved instruction following, JSON mode, and parallel function calling. Notably, OpenAI's internal evaluations show a 38% improvement on format-following tasks such as generating JSON, XML, and YAML.

Assistants API: Elevating AI App Development

OpenAI's Assistants API is a game-changer for developers aiming to create AI-powered applications with specific goals and capabilities. Assistants are purpose-built AIs that follow custom instructions, draw on extra knowledge, and can call models and tools to perform tasks. The API takes care of much of this plumbing, making high-quality AI apps easier to build. Built-in tools include Code Interpreter, Retrieval, and function calling. The API also introduces persistent and infinitely long threads, which let developers hand off conversation state management to OpenAI and work around context window limits.
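In the Assistants API, OpenAI stores threads server-side and decides what fits into the model's context on each run; the exact strategy is not described here. The sketch below is a purely local, hypothetical illustration of the general idea: keep the full history persistent, but send only the most recent messages that fit a token budget. The `Thread` class, the word-count token estimate, and the budget are assumptions for illustration and are not part of the API.

```python
from dataclasses import dataclass, field

def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())

@dataclass
class Thread:
    """Hypothetical persistent thread: every message is kept."""
    messages: list[dict] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def context_window(self, max_tokens: int) -> list[dict]:
        """Most recent messages whose combined size fits in max_tokens."""
        window, used = [], 0
        for msg in reversed(self.messages):
            cost = estimate_tokens(msg["content"])
            if used + cost > max_tokens:
                break
            window.append(msg)
            used += cost
        window.reverse()  # restore chronological order
        return window

thread = Thread()
thread.add("user", "What is the capital of France?")
thread.add("assistant", "Paris.")
thread.add("user", "And of Italy?")
thread.add("assistant", "Rome.")

print(len(thread.messages))      # full history is retained
print(thread.context_window(5))  # only the newest messages that fit
```

The history itself never shrinks; only the slice sent to the model does, which is why a thread can grow without hitting the context limit.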

New Modalities: Vision and Text-to-Speech

GPT-4 Turbo sets the stage for a new era of AI capabilities: it can now accept images as inputs in the Chat Completions API, through the gpt-4-vision-preview model. This unlocks applications such as generating image captions, analyzing real-world images in detail, and reading documents with figures. Additionally, developers can now generate human-quality speech from text through the new text-to-speech API, which offers six preset voices and two model variants: tts-1, optimized for real-time use, and tts-1-hd, optimized for quality.
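Images are passed to the Chat Completions API as content parts alongside text, while text-to-speech uses a separate speech endpoint that takes a model, a voice, and the input text. The sketch below only builds request bodies and makes no network calls. The model names `gpt-4-vision-preview`, `tts-1`, and `tts-1-hd` and the voice `alloy` come from the DevDay announcements; the helper names and the placeholder image bytes are hypothetical. Local images can be sent inline as a base64 `data:` URL.

```python
import base64

def vision_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """User message that combines a text prompt with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }

def speech_request(text: str, voice: str = "alloy", hd: bool = False) -> dict:
    """Body for the text-to-speech endpoint; tts-1-hd favors quality over latency."""
    return {"model": "tts-1-hd" if hd else "tts-1", "voice": voice, "input": text}

vision_request = {
    "model": "gpt-4-vision-preview",
    "max_tokens": 300,
    "messages": [vision_message("Write a caption for this image.", b"placeholder image bytes")],
}
tts = speech_request("In 300 feet, turn left onto Maple Street.")

print(vision_request["messages"][0]["content"][1]["image_url"]["url"][:40])
print(tts)
```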

"In 300 feet, turn left onto Maple Street. Continue on Maple Street for half a mile. Your destination will be on the right. You will arrive at your destination in approximately two minutes."

GPT-4 Fine-Tuning and Custom Models

OpenAI is creating an experimental access program for GPT-4 fine-tuning. However, it acknowledges that achieving meaningful improvements over the base model is harder with GPT-4 fine-tuning than it was with GPT-3.5 fine-tuning. For organizations that need an even deeper level of customization, OpenAI is rolling out a Custom Models program, in which selected organizations work closely with OpenAI researchers to train a custom GPT-4 model tailored to their specific domain.

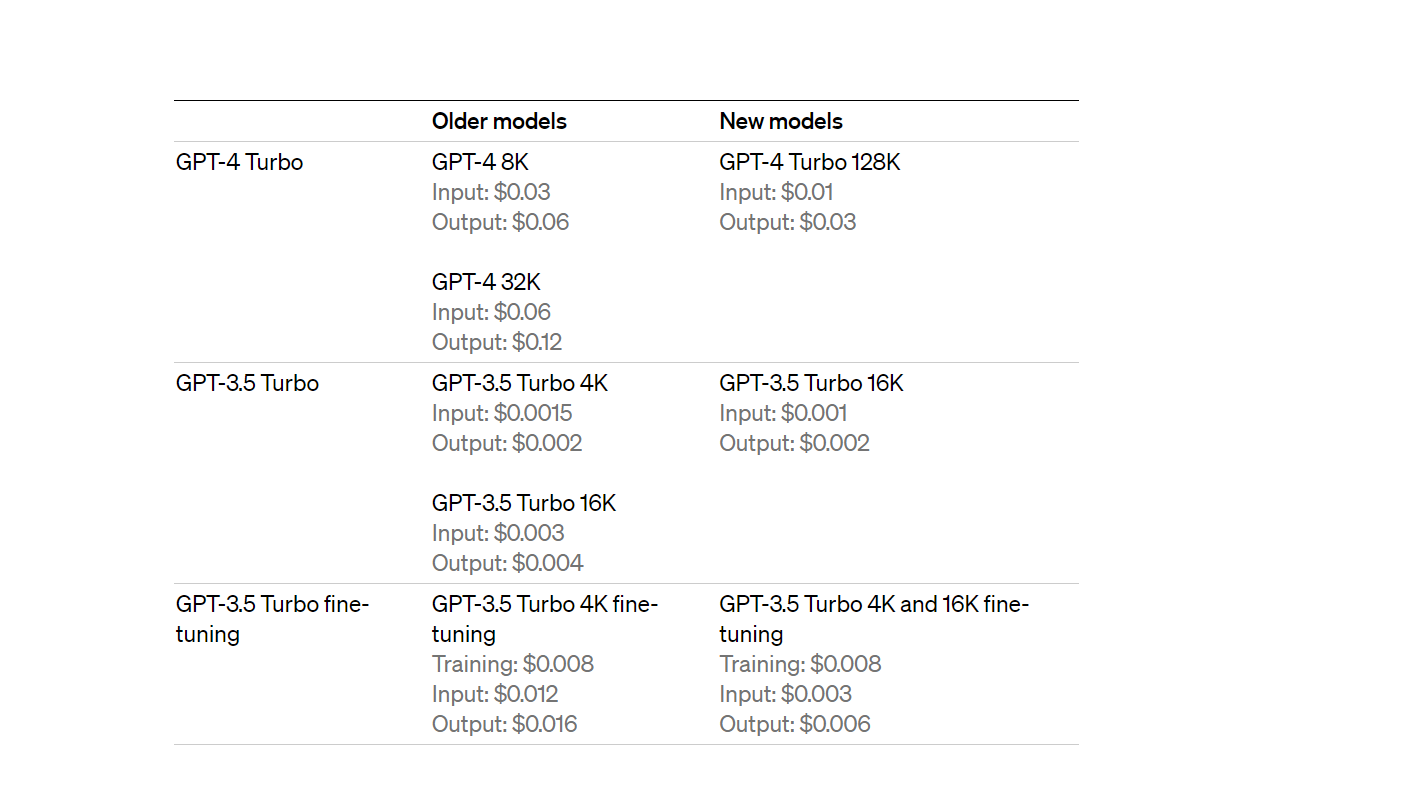

Lower Prices and Higher Rate Limits

OpenAI is committed to passing cost savings on to developers and has cut prices across the platform. GPT-4 Turbo input tokens are 3x cheaper than GPT-4, and output tokens are 2x cheaper. The new GPT-3.5 Turbo is cheaper too: its input tokens are 3x cheaper and its output tokens 2x cheaper than those of the previous 16K model. To help developers scale their applications, OpenAI has also doubled the tokens-per-minute limit for all paying GPT-4 customers.
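The price ratios are easy to check with a small cost calculator. The per-1K-token prices below are the launch list prices as we understand them: GPT-4 at $0.03 input / $0.06 output, GPT-4 Turbo at $0.01 / $0.03, the previous GPT-3.5 Turbo 16K at $0.003 / $0.004, and the new GPT-3.5 Turbo at $0.001 / $0.002. Prices change over time, so verify them against OpenAI's pricing page before relying on the numbers. `Decimal` is used to avoid floating-point rounding in currency math, and the workload size is an arbitrary example.

```python
from decimal import Decimal

# Launch prices in USD per 1,000 tokens as (input, output); check current pricing.
PRICES = {
    "GPT-4": (Decimal("0.03"), Decimal("0.06")),
    "GPT-4 Turbo": (Decimal("0.01"), Decimal("0.03")),
    "GPT-3.5 Turbo 16K (previous)": (Decimal("0.003"), Decimal("0.004")),
    "GPT-3.5 Turbo (new)": (Decimal("0.001"), Decimal("0.002")),
}

def cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """USD cost of one request at the listed per-1K-token prices."""
    price_in, price_out = PRICES[model]
    return (price_in * input_tokens + price_out * output_tokens) / 1000

# Example workload: 10,000 input tokens and 1,000 output tokens.
for model in PRICES:
    print(f"{model}: ${cost(model, 10_000, 1_000)}")
```

Because this example workload is input-heavy, the GPT-4 Turbo saving lands closer to the 3x input ratio than to the 2x output ratio.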

Copyright Shield: Legal Protection for Developers

OpenAI is taking steps to protect its customers against legal claims of copyright infringement. Under the new Copyright Shield, OpenAI will step in to defend its customers and pay the costs incurred if they face such claims. The protection applies to ChatGPT Enterprise and to the generally available features of OpenAI's developer platform.

Whisper v3 and Consistency Decoder

OpenAI has released Whisper large-v3, the next version of its open-source automatic speech recognition model, with improved performance across many languages. OpenAI also plans to support Whisper v3 in its API in the near future. In addition, OpenAI has open-sourced the Consistency Decoder, a drop-in replacement for the Stable Diffusion VAE decoder. It improves the quality of generated images, particularly text, faces, and straight lines.